Prof. Dr. Kai Rannenberg

The future of data security with digital media: multilateral security as a necessity and enabler

Media have become predominantly digital in recent years. This affects both how they can be secured and which security objectives they support, and how well. This article therefore first looks at the concept of security, which can be understood in very different ways, and introduces the idea of multilateral security. This provides the basis for a closer look at securing digital media, which in turn motivates an analysis of the approach of security through hunting and gathering. The consequences of this approach for data protection and privacy lead to a discussion of approaches for reducing data flows and, above all, placing them under user control. The article closes with a conclusion that also briefly addresses media literacy.

Security

Security has several facets. For the topic of the future of data security with digital media, it makes sense to focus on data security and, in particular, on protection against intentional attacks (security in the narrower sense, as opposed to safety). Here, too, there are several perspectives, of which I have selected two:

A first perspective shows a very human dichotomy: on the one hand, there is one's own privacy and the protection of one's own (immaterial and material) values; on the other hand, there is the wish for binding commitments, which one wants to achieve by gaining the trust of partners and, where necessary, by transferring values and money.

A more technical perspective leads to a division into four sub-areas:

Confidentiality, because information should not reach the wrong people.

Integrity, because information should not be falsified.

Availability, because resources (especially information resources) should not fail.

Accountability, because it should be possible to attribute actions to whoever carried them out.

With regard to people, there is therefore a dichotomy, and the technical goals are not free of contradictions either. Accordingly, security is not a goal that can be reached simply by pushing for "faster, higher, further". It requires a combination of technical, organisational and legal measures tailored to each individual case.

Security must be multilateral because the interests of all parties involved have to be taken into account (Rannenberg, Pfitzmann, Müller 1996; Rannenberg 2000). In the telecommunications sector, for example, these parties are network operators (such as Deutsche Telekom) and users, but often also service providers (such as mobilcom-debitel) that act as intermediaries between network operators and users. Even in this highly simplified scenario, interests conflict: users do not want to pay for services they have not used, while network operators want to be paid for the services they provide and to make sure they are not cheated. The situation becomes considerably more complicated with the convergence of telecommunications and media services, for example on the Internet, which adds many more players and stakeholders. All of these parties must be taken into account, and their interests balanced and protected. This places considerable demands on the technology used, and these demands are not always met.

Securing digital media

If we look at the security of digital media along the lines of the technical structure just introduced, the following becomes apparent:

Availability and broad distribution are comparatively easy to achieve in the age of the Internet; at the very least, restrictions and censorship are much more difficult to enforce than they used to be. At the same time, confidentiality of media usage is harder to establish, because activities on the Internet, including simply reading or viewing content, are much easier to log than, for example, with traditional radio-based broadcasting, whose use leaves traces only in exceptional cases.

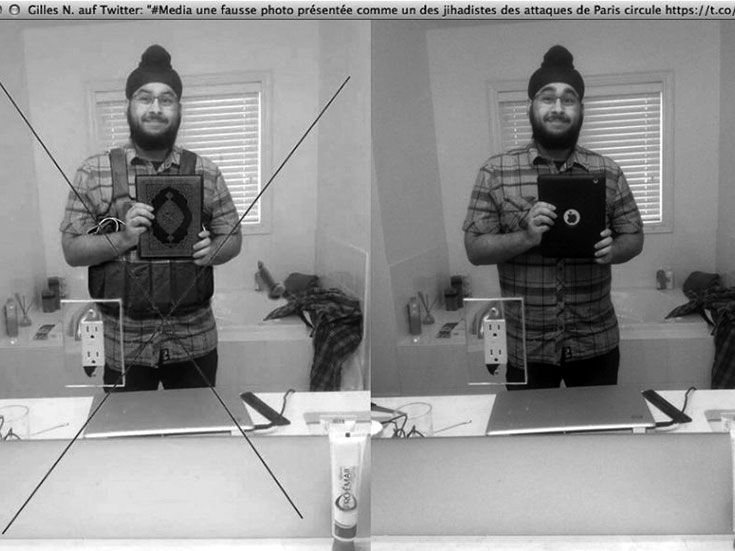

The integrity and authenticity of digital media content is difficult to prove but easy to compromise. Manipulation often goes unnoticed or is noticed only (too) late. Fig. 1 shows a recent example.

On the left is a photo that circulated on the Internet on 14 November 2015, after the attacks in Paris; on the right is the original. Someone skilled with Photoshop turned the iPad used to take the self-portrait into a Koran and "added" a suicide vest to the person shown. The manipulated image spread very quickly on the Internet as a supposed self-portrait of one of the attackers and was also disseminated as authentic by media outlets considered reputable. The example shows how quickly, in a certain climate, a manipulated photo can lead to misjudgements and be passed on, even when it contains errors that could have been noticed (e.g. the electrical sockets visible in the bathroom mirror are not of the French type). Distinguishing original from fake turns out to be difficult.

In general, it is not possible to tell whether digitised images or other digital files are authentic or manipulated simply by looking at them (in this respect, the left-hand image in Fig. 1 is still a simple case). Ensuring the authenticity of data or information has proven to be a highly complex problem. There are advances such as digital signatures, time stamps and other certificates, but they require very complex infrastructures. Ultimately, they work only to the extent that they shift trust to the entity that has signed, stamped or certified the file in question. Viewers therefore have to establish or verify trust in three respects:

Viewers must trust the entity that has signed, stamped or certified the file, or they must verify its trustworthiness.

Viewers must trust, or verify, that the signature, stamp or certificate on the file really originates from the entity it claims to come from.

Viewers must also trust or check their own device that interprets the digital stamps, signatures and certificates.
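The way such mechanisms shift trust rather than create it can be illustrated with an even simpler building block than a signature. The following Python sketch (an illustration added here, not a method from the article) uses only a SHA-256 digest: comparing a file against a reference digest reveals any change to the file, but it cannot tell whether the reference digest itself came from a trustworthy source. A real digital signature additionally binds the digest to a key, which moves the trust question to the key holder and the certificate infrastructure. The function names are hypothetical.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_reference(data: bytes, reference_digest: str) -> bool:
    """True if the data is bit-for-bit what the reference digest was computed over.

    This only detects changes relative to the reference. Whether the reference
    itself is genuine (who published it, over which channel) is a separate
    question that the hash cannot answer.
    """
    return sha256_hex(data) == reference_digest


original = b"bytes of the original photo"
reference = sha256_hex(original)

print(matches_reference(original, reference))            # True
print(matches_reference(original + b"edit", reference))  # False: any change is detected

# But whoever can replace both the file and the reference digest passes the check:
fake = b"bytes of a manipulated photo"
print(matches_reference(fake, sha256_hex(fake)))         # True
```

The last check is the point: the computation is trivial, and everything depends on where the reference value comes from.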

Security through hunting and gathering

Problematically, the authenticity of digital content, i.e. the question of whether a document is genuine, is often confused with the identification of the person presenting it: "If you know the person showing you the document, then hopefully it is genuine." As a result of this confusion, more and more identifying information about users is being collected. This data is usually not conclusive on its own, but it can point to individuals. What is more, users already leave many traces on the Internet, because storing data is technically easy and many parties like to do it.

The situation is similar with identity management: because comprehensive networking makes it technically easy to ask a third party about someone making a request, this is done more and more often. Traditionally, ID cards and authorisations are checked locally. When someone arrives at a hotel, wants to check into their room and presents their ID card, the hotel staff usually check the card on site. A further enquiry, for example with the issuer of the ID card, takes place only in rare exceptional cases. On the Internet, the situation is often different: when someone logs on to a web portal, the portal often asks a third party (e.g. Facebook or Google) whether the account is known and the password entered is correct. This is known as "phoning home" to the identity provider. The same applies to the upcoming European eID system (EU 2014): in many cases the issuer will be contacted, with the enquiries routed via an intermediate computer (gateway) in each EU member state.

The problem is that whoever receives these requests can compile a detailed profile of the user's Internet activities, namely a list of the websites that each user logs on to. This is highly interesting for Facebook and Google, for example, because they learn more precisely what else their users do on the Internet and can tailor their services accordingly. Once the data exists, however, it can of course also be used for other purposes.
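The profiling side effect of phoning home can be sketched in a few lines of Python. The `IdentityProvider` class, its methods and the site names below are hypothetical and only model the data flow described above: every login check tells the provider which user is logging on to which site, whether or not the login succeeds.

```python
from collections import defaultdict


class IdentityProvider:
    """Hypothetical identity provider that relying websites ask about logins.

    Passwords are kept in plain text only to keep the sketch short; a real
    provider would store salted password hashes.
    """

    def __init__(self, passwords: dict[str, str]) -> None:
        self._passwords = passwords
        # Side effect of answering queries: per user, the sites they logged on to.
        self._log: defaultdict[str, list[str]] = defaultdict(list)

    def check_login(self, user: str, password: str, relying_site: str) -> bool:
        # Every query reveals the user and the relying site to the provider,
        # regardless of whether the password turns out to be correct.
        self._log[user].append(relying_site)
        return self._passwords.get(user) == password

    def profile(self, user: str) -> list[str]:
        """The activity profile the provider has accumulated for one user."""
        return list(self._log.get(user, []))


idp = IdentityProvider({"alice": "correct horse"})
idp.check_login("alice", "correct horse", "news.example")
idp.check_login("alice", "wrong guess", "health-forum.example")
print(idp.profile("alice"))  # ['news.example', 'health-forum.example']
```

A local check, as with the ID card at the hotel reception, would have no equivalent of `_log` at a central party.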

Because it is difficult to check electronic documents (such as ID cards, but also media content) for authenticity on their own, and because it is easy to set up communication links for automated queries, a large amount of data, some of it very sensitive, is generated and transmitted.

Data collection and data protection/privacy

There are now many examples of conclusions being drawn about individuals from data that was initially thought not to be personally identifiable. In a very early example, Latanya Sweeney linked published medical data of Massachusetts state employees with a public electoral roll and identified the governor and his medical records (Sweeney 2002). In other examples, anonymous Netflix users were identified from their film ratings (Narayanan, Shmatikov 2008), and anonymisation algorithms intended to protect users of networked cars were defeated (Troncoso et al. 2011). Mobility data can be derived from mobile communication data: if a user's location is recorded every hour with the precision of a mobile phone cell, four such data points are enough to uniquely identify 95 per cent of users (de Montjoye et al. 2013). For credit card transaction data, four randomly selected combinations of purchase date and shop were sufficient to uniquely identify 90 per cent of customers; if an approximate purchase price is added, 95 per cent can be identified (de Montjoye et al. 2015). Women were easier to identify due to their more individual shopping behaviour.
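The measure behind the mobility and credit card figures is often called unicity: the share of people whose trace is uniquely pinned down by p of its points. The following Python sketch is a simplified version of such an estimate, assuming each trace is a set of (hour, cell) pairs; the function name, data layout and toy data are illustrative assumptions, not taken from the cited studies.

```python
import random


def unicity(traces: dict[str, set[tuple[int, str]]], p: int, seed: int = 0) -> float:
    """Estimate the share of users uniquely pinned down by p points of their own trace.

    traces maps a (hypothetical) user id to the set of (hour, cell) points
    recorded for that user. For each user with at least p points, p points are
    drawn at random; the user counts as unique if no other trace contains all of them.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    eligible = [u for u, t in traces.items() if len(t) >= p]
    if not eligible:
        return 0.0
    unique = 0
    for user in eligible:
        # sorted() turns the set into a sequence, as random.sample requires
        points = set(rng.sample(sorted(traces[user]), p))
        matching = [u for u, t in traces.items() if points <= t]
        if len(matching) == 1:  # only the user's own trace matches
            unique += 1
    return unique / len(eligible)


# Hypothetical toy data: three people who overlap heavily in time and place.
toy = {
    "u1": {(8, "A"), (12, "B"), (18, "C")},
    "u2": {(8, "A"), (12, "B"), (18, "D")},
    "u3": {(8, "A"), (13, "B"), (18, "C")},
}
print(unicity(toy, 3))  # 1.0: each complete trace is unique
```

Even though every pair of these toy traces shares most of its points, the full traces are all distinct; the cited studies show that with real data a handful of points already suffices.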

In light of current events, here is another related example: the retention of location data in mobile phone networks. Location data in mobile phone networks is personal data and not normally accessible to the public. In the interests of transparency, however, the German Green Party politician Malte Spitz requested "his" retained data in 2009, when data retention was permitted, and analysed it publicly together with Zeit Online (Zeit Online 2015). The data shows very precisely where he was and when, and how he moved around. Such data is normally kept under wraps, but over the last 30 years a great deal of data that was supposed to remain under lock and key has at some point become generally available or public. It therefore cannot be ruled out that the same will happen with such data, even if only because someone steals it from a telecommunications company.

Reduction and user control of data flows

Given this situation, data flows need to be reduced, and the users affected by them need more control over them. Examples of relevant strategies are:

Making information and communication as unobservable and anonymous as possible;

Allowing users to decide which information they want to disclose for which services, for example by means of partial identities (ISO/IEC 24760-1:2011);

Limiting identification and authorisation checks to the information that is really necessary, as practised for example in the ABC4Trust project, in which users can determine their own level of identification and adapt their digital credentials accordingly (ABC4Trust; Rannenberg, Camenisch, Sabouri 2015);

Making communication options that are protected against interception more widely available.
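The idea behind partial identities and minimal disclosure can be sketched as a data flow: the user holds a full credential but shows a service only selected attributes, or only a derived statement such as "at least 18 years old" instead of the birth date. The Python sketch below is purely illustrative; the `Credential` fields, the function names and the sample values are hypothetical, and it contains no cryptography. Attribute-based credential systems such as those used in ABC4Trust (e.g. Idemix and U-Prove) make such statements cryptographically verifiable without the issuer being contacted, which this sketch does not attempt.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Credential:
    """Hypothetical ID credential held by the user (no cryptography in this sketch)."""
    name: str
    birth_date: date
    nationality: str
    postcode: str


def age_on(birth_date: date, day: date) -> int:
    """Age in completed years on the given day."""
    before_birthday = (day.month, day.day) < (birth_date.month, birth_date.day)
    return day.year - birth_date.year - int(before_birthday)


def present(cred: Credential, reveal: set[str], today: date,
            min_age: Optional[int] = None) -> dict:
    """Return only what the user chooses to disclose to a service.

    reveal: attribute names to show in full (e.g. {"nationality"}).
    min_age: if given, disclose only whether the holder is at least that old,
             instead of the birth date itself.
    """
    shown = {attr: getattr(cred, attr) for attr in sorted(reveal)}
    if min_age is not None:
        shown[f"age_at_least_{min_age}"] = age_on(cred.birth_date, today) >= min_age
    return shown


alice = Credential("Alice Example", date(1990, 5, 17), "DE", "60325")
print(present(alice, set(), date(2016, 5, 24), min_age=18))  # {'age_at_least_18': True}
print(present(alice, {"nationality"}, date(2016, 5, 24)))    # {'nationality': 'DE'}
```

Each service thus sees a different, minimal partial identity of the same person.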

The following measures are recommended to make the use of information and communication systems as anonymous and unobservable as possible:

Broadcasting and anonymous payment: The Internet offerings of public broadcasters, such as Radio Bremen, are often attractive and valuable, but conventional broadcasting (terrestrial or via satellite) remains important because it is very difficult to determine whether a user is receiving a particular programme. The Internet has not yet been able to offer this kind of simple, (almost) trace-free media usage. In this context, anonymous payment is particularly important if media usage is to be billed individually. Anonymous payment on the Internet is not as advanced as is often assumed; in particular, the much-discussed Bitcoin system is not really anonymous.
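One reason Bitcoin is not really anonymous is that all transactions are public and can be linked to each other. A widely used analysis technique, the common-input-ownership heuristic, assumes that all addresses spent together as inputs of one transaction belong to the same owner. The Python sketch below applies this heuristic with a union-find structure; the function name and the address labels are hypothetical. Real chain analysis combines it with further heuristics and external data (for example exchange records) to attach names to the resulting clusters.

```python
def cluster_addresses(transactions: list[list[str]]) -> list[set[str]]:
    """Group addresses using the common-input-ownership heuristic.

    Each transaction is given as the list of addresses it spends from. All
    inputs of one transaction are assumed to share an owner, so their
    addresses are merged into one cluster (union-find).
    """
    parent: dict[str, str] = {}

    def find(a: str) -> str:
        parent.setdefault(a, a)
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving keeps trees shallow
            a = parent[a]
        return a

    def union(a: str, b: str) -> None:
        parent[find(a)] = find(b)

    for inputs in transactions:
        for addr in inputs:
            find(addr)  # register addresses that only ever appear alone
        for other in inputs[1:]:
            union(inputs[0], other)

    clusters: dict[str, set[str]] = {}
    for addr in parent:
        clusters.setdefault(find(addr), set()).add(addr)
    return list(clusters.values())


# Hypothetical transactions: A and B are spent together, later B and C.
print(cluster_addresses([["A", "B"], ["B", "C"], ["D"]]))
```

Addresses A, B and C end up in one cluster although no single transaction contains all three; once any one of them is linked to a person, so are the others.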

Several systems help to avoid leaving data traces on the Internet, such as the Tor network (http://tor.eff.org), the Java Anonymous Proxy (JAP, anon.inf.tu-dresden.de), the Mixmaster system (http://mixmaster.sourceforge.net) and also the Cookie Cooker service (www.cookiecooker.de), which provides disposable e-mail addresses.

Search engines that operate without storing search queries or information about the people searching include, in particular, ixquick.com and duckduckgo.com.

Many protection measures, such as secure encrypted communication, should also be required by law to be offered on generally affordable terms. The Universal Service Directive (EU 2002 ff.) on the provision of electronic communications services within the EU, which is currently being revised, lends itself to this, as it regulates a whole range of obligations and rights, for example obligations to provide certain mandatory services (universal services), the rights of end users, and the corresponding obligations of companies that provide publicly accessible electronic communications networks and services. Among other things, three areas have been regulated so far: ISDN-type telephone services at affordable prices, switching provider within one working day while keeping one's telephone number, and the availability of the emergency number 112. Encrypted communication (e-mail, voice, etc.), secure end devices (especially a trustworthy, affordable smartphone) and unobservable access to the Internet, for example, would need to be added.

Conclusion

Data security is not a suitable substitute for media literacy. On the contrary: knowledge of information and communication security or insecurity must be part of media literacy. If users and their data are not protected, they will not treat other people's data well either. There are many cases in which data has ended up in unauthorised hands because the people who were officially responsible for handling it did not understand that the data was sensitive: In England, several million health records were once burnt onto CDs by the people in charge and sent by normal post without any further protective measures. The CDs have still not been recovered.

On data retention: monitoring ordinary users in everyday situations is more likely to create mistrust than trust. Government action should instead aim to make protected and unobservable communication more widely affordable. It must not be the case that someone who wants to communicate in a protected and unobservable way can no longer take part in social life as a result. Some kind of basic provision is urgently needed here.

Further reading

ABC4Trust (n.d.): www.abc4trust.eu

EU (2002): Regulatory framework for electronic communications. eur-lex.europa.eu/legal-content/DE/TXT/ (accessed 24 May 2016).

EU (2014): Regulation No 910/2014 of the European Parliament and of the Council of 23 July 2014 on electronic identification and trust services for electronic transactions in the internal market and repealing Directive 1999/93/EC. eur-lex.europa.eu/legal-content/DE/TXT/ (accessed 24 May 2016).

ISO/IEC 24760-1 (2011): "A framework for identity management. Part 1: Terminology and concepts". standards.iso.org/ittf/PubliclyAvailableStandards/index.html; developed by ISO/IEC JTC 1/SC 27/WG 5: Identity Management and Privacy Technologies; www.jtc1sc27.din.de (accessed 24 May 2016).

Mayer, Ayla (2015): Photo manipulation: how a Canadian Sikh became a Paris attacker online. In: Spiegel Online, www.spiegel.de/netzwelt/web/paris-anschlag-per-photoshop-vom-journalisten-zum-paris-attentaeter-a-1063090.html (accessed 16 November 2015).

de Montjoye, Yves-Alexandre/Hidalgo, César A./Verleysen, Michel/Blondel, Vincent D. (2013): Unique in the Crowd: The privacy bounds of human mobility. In: Scientific Reports 3, Article number: 1376; doi:10.1038/srep01376

de Montjoye, Yves-Alexandre/Radaelli, Laura/Singh, Vivek K./Pentland, Alex S. (2015): Unique in the shopping mall: On the reidentifiability of credit card metadata. In: Science, Vol. 347, No. 6221, pp. 536-539; doi:10.1126/science.1256297

Narayanan, Arvind/Shmatikov, Vitaly (2008): Robust De-anonymization of Large Sparse Datasets (How To Break Anonymity of the Netflix Prize Dataset). In: Proceedings of the 2008 IEEE Symposium on Security and Privacy, Oakland, pp. 111-125; doi:10.1109/SP.2008.33

Rannenberg, Kai/Pfitzmann, Andreas/Müller, Günter (1996): Sicherheit, insbesondere mehrseitige IT-Sicherheit. In: Informationstechnik und Technische Informatik (it+ti), Vol. 38, No. 4, pp. 7-10.

Rannenberg, Kai (2000): Multilateral Security - A concept and examples for balanced security. In: Proceedings of the 9th ACM New Security Paradigms Workshop 2000, pp. 151-162.

Rannenberg, Kai/Camenisch, Jan/Sabouri, Ahmad (eds.) (2015): Attribute-based Credentials for Trust: Identity in the Information Society. Cham: Springer. ISBN 978-3-319-14439-9 (online).

Sweeney, Latanya (2002): k-anonymity: a model for protecting privacy. In: International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems, Vol. 10, No. 5, pp. 557-570.

Troncoso, Carmela/Costa-Montenegro, Enrique/Diaz, Claudia/Schiffner, Stefan (2011): On the difficulty of achieving anonymity for Vehicle-2-X communication. In: Computer Networks, Vol. 55, No. 14, pp. 3199-3210.

Zeit Online (2015): Verräterisches Handy, www.zeit.de/datenschutz/malte-spitz-vorratsdaten (accessed 1 November 2015).